This is the real takeaway of my last few posts: Hunting for suspicious domains, Hunting for Threats like a Quant and Predicting Attacks. In these posts we used Python, SKLearn and some simple machine learning techniques that give hunters a statistical edge when protecting their network. These modules alone are trivial to incorporate into your current Python frameworks, or command-line bag-o-tricks. You're not a Python shop? What if your tools are spread out among your team, infrastructure or multiple vendors? How do you integrate these simple, yet effective processes into your normal day-to-day?

Simple: stand up a microservice. It sounds sexier than it really is, though. Microservices are simple, [usually] REST-based HTTP interfaces whose "front end social contract with the users" (e.g. parameters, return values, etc.) rarely changes. They're also network based, which means you are now free to expose your Python-specific magic to other languages that may not do machine learning magic so well (Perl, Ruby, C, etc.).
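To make the "contract" idea concrete, here is a minimal sketch using only the Python standard library (not the Flask stack discussed later). The `/predict` route, the `q` parameter, the JSON field names and the toy `score_domain` heuristic are all assumptions made up for illustration; the point is only that callers see a fixed request and response shape while the scoring function behind it can be swapped freely.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse


def score_domain(domain):
    """Placeholder for a real model; replace it without touching the contract."""
    # Toy heuristic only (hypothetical): longer, digit-heavy names score higher.
    digits = sum(ch.isdigit() for ch in domain)
    return round(min(1.0, (len(domain) + 5 * digits) / 100), 2)


class PredictHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        url = urlparse(self.path)
        query = parse_qs(url.query)
        # The contract: GET /predict?q=<domain> returns {"indicator", "score"}.
        if url.path != "/predict" or "q" not in query:
            self._reply(400, {"error": "usage: /predict?q=<domain>"})
            return
        domain = query["q"][0]
        self._reply(200, {"indicator": domain, "score": score_domain(domain)})

    def _reply(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the sketch quiet; a real service would log requests


def make_server(host="127.0.0.1", port=5000):
    """Build the HTTP server; the caller decides when to start serving."""
    return HTTPServer((host, port), PredictHandler)
```

Calling `make_server().serve_forever()` starts it; anything that can speak HTTP and parse JSON can then consume the scores, whatever language it is written in.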

Agility, Where it Makes Sense...

It also means you are able to upgrade, tweak and enhance your machine-learning magic week to week as the bad guys adapt, all without having to update all your tools. Simply roll out the new version of your Python modules, restart the web services and things keep humming along. If something breaks, just roll back to the previous version.

Have a monolithic application for performance reasons? No problem, just use the libraries inline. It's not a Python application? Call the command-line version of the module and capture its STDOUT. There are many ways to take advantage of these libraries, but for ease of deployment we're going to focus on the simplest and most modular: standing these up as a single, simple, documented microservice.
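The "call the command-line version and capture STDOUT" option looks roughly like the sketch below. It is written in Python for consistency with the rest of the post, but the same pattern applies with any language's process API. The helper name `run_cli` is an assumption for illustration, and no particular command name or output format is implied.

```python
import subprocess


def run_cli(argv, timeout=30):
    """Run a command-line tool and return its STDOUT as a list of non-empty lines."""
    result = subprocess.run(
        argv,
        stdout=subprocess.PIPE,   # capture STDOUT instead of printing it
        stderr=subprocess.PIPE,   # capture STDERR so it does not leak into the caller
        universal_newlines=True,  # decode bytes to str (works on Python 3.6)
        timeout=timeout,          # do not hang forever on a stuck tool
        check=True,               # raise CalledProcessError on a non-zero exit code
    )
    return [line for line in result.stdout.splitlines() if line.strip()]
```

Usage would be something like `run_cli(["some-predict-tool", "example.com"])`, where `some-predict-tool` is a hypothetical stand-in for whichever command-line entry point you have installed.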

Threat Platforms as Microservices

Over the years, as we've observed how people use CIF, more times than not they're using it as a microservice itself. That's part of the reason CIF isn't a car boat. Most of our users are simply trying to normalize data, and query or feed it into another service. Sometimes this is done programmatically, other times it's by calling a command-line "tool" from the right-click menu of something like ArcSight or Splunk. They're looking to seed the context of their feeds into their network devices or SEM.

In fact, to make things simpler, I created a Python package for it. With a couple of system-level dependencies (mainly GeoIP for the IP address features), pip install takes care of the rest. The caveat here: we're trying to standardize around Python 3 (3.6 to be exact), mainly because of its handling of unicode and its ability to parse raw email, something noted in the embedded email parsing API of this prediction service, which is still in an alpha-like state.

This is very similar to the API that backends the [somewhat hidden] https://csirtg.io prediction API. There aren't a lot of great machine learning tools for Ruby. There are some ported SKLearn-like things (and odd hacks to get SKLearn to work in Ruby), but, being an ex-Gentoo person, there are only so many things I want to compile to get stuff working. Since csirtg.io is a Rails application, a Flask-based microservice was the only feasible way to go. Also, for distributed performance reasons, CSIRTG is a majestic monolith. For things like predictions, it made sense to strike a balance by abstracting this logic out into a separate service.

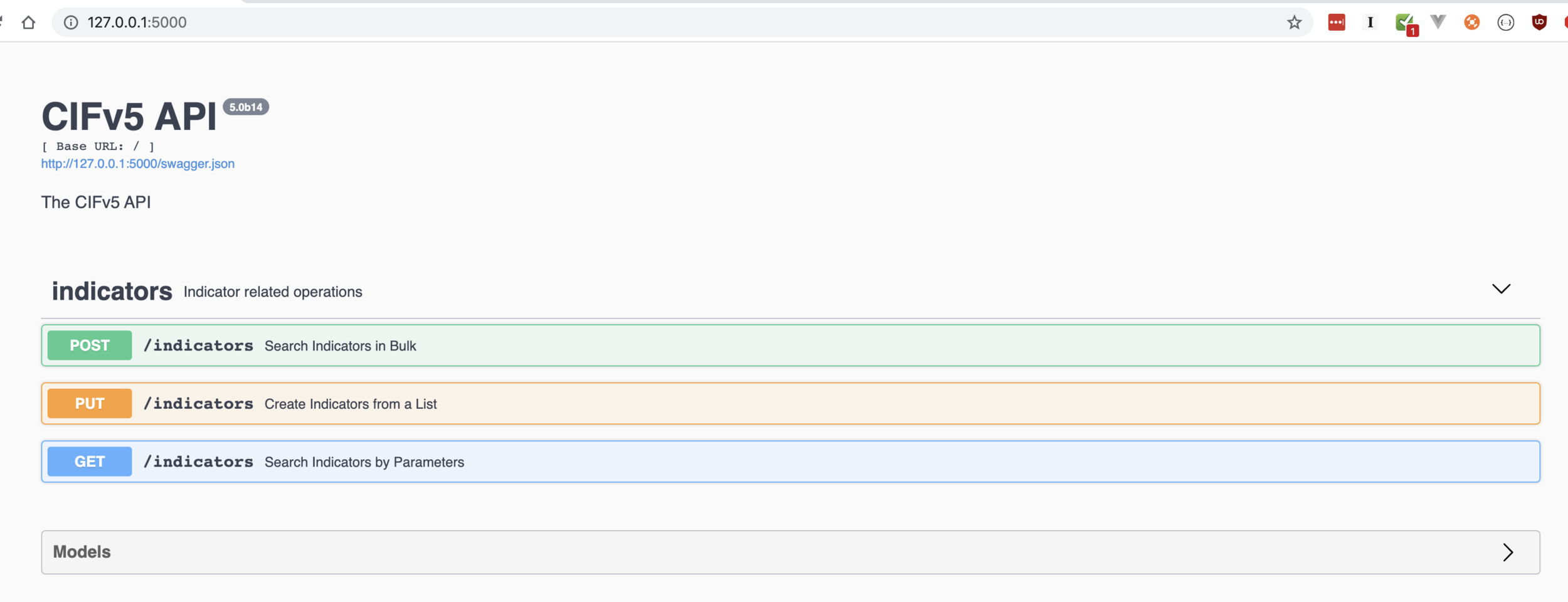

For this module we're using both Flask and Flask-RESTPlus, which is a fantastic module for building API-only interfaces that are auto-documented via Swagger out of the box. Each of the APIs follows a similar pattern, making them easy to follow, document and extend. As you build or add other interfaces to your API, with the right tweaks they show up and can be tested by users via the default route. The less curl fiddling your users have to do to understand the API, the faster they'll be able to run with your framework.

Unfortunately, until things like Bro and Snort are able to bridge their network pattern matching engines with SKLearn models (hrmm, smells like a good idea for some funded research?), we're still stuck with moving real data around. That's not to suggest they can't do REST-based lookups over the network, or build some of these libraries into their frameworks, but these lookups tend to be a bit slower than what we'd likely accept as normal. When you're monitoring 10-100 GB/sec, network latency matters. The more of those decisions you can distribute to the edge, the better.

Predicting the predictions of ... predictions..

“I very frequently get the question: ‘What’s going to change in the next 10 years?’ And that is a very interesting question; it’s a very common one. I almost never get the question: ‘What’s not going to change in the next 10 years?’ And I submit to you that that second question is actually the more important of the two — because you can build a business strategy around the things that are stable in time. … [I]n our retail business, we know that customers want low prices, and I know that’s going to be true 10 years from now. They want fast delivery; they want vast selection. It’s impossible to imagine a future 10 years from now where a customer comes up and says, ‘Jeff I love Amazon; I just wish the prices were a little higher,’ [or] ‘I love Amazon; I just wish you’d deliver a little more slowly.’ Impossible. And so the effort we put into those things, spinning those things up, we know the energy we put into it today will still be paying off dividends for our customers 10 years from now. When you have something that you know is true, even over the long term, you can afford to put a lot of energy into it.”

Ten years from now, it wouldn't surprise me if, instead of trading threat intel, we were trading data models (read: glorified patterns and/or TTPs). It also wouldn't surprise me if the need for traditional threat intel platforms went away, because those models are either baked into the detection frameworks and/or more easily accessible over the network.

Invest in and focus on the things that aren't likely to change in 10 years. Build the scaffolding first, focus on the contracts between your teams, components, and architecture. Then spend your cycles iterating on the internals of each. Give others a chance to learn, test, trust and incorporate your contracts while you iterate toward their perfection.

Did I mention this comes as a docker app too? By ten minutes, I really meant 30 seconds.. YMMV :)

$ docker run -p 5000:5000 csirtgadgets/csirtg-predictd:latest
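
Once the container is listening on port 5000, a client needs nothing more than HTTP and a JSON parser. The sketch below uses only the standard library. The route name, the `q` query parameter and the JSON response are assumptions for illustration, not the documented API; check the Swagger page served at the default route for the real routes and parameters.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:5000"  # matches the -p 5000:5000 port mapping


def build_url(route, indicator, base_url=BASE_URL):
    """Build a lookup URL; `route` and the `q` parameter are hypothetical."""
    query = urllib.parse.urlencode({"q": indicator})
    return "{}/{}?{}".format(base_url.rstrip("/"), route.strip("/"), query)


def predict(route, indicator, base_url=BASE_URL, timeout=5):
    """Fetch a prediction and decode the body, assuming the service returns JSON."""
    url = build_url(route, indicator, base_url)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Something like `predict("domain", "example.com")` would then return whatever the service sends back for that (hypothetical) route, decoded into a Python object.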