We had a funny thread pop up on the cif-users list this week. The punchline was:

“We have evidence from vendors that it can take them less than a day to get a TAXII server stood up and ingesting threat intel from scratch”

It's 2018.

Let that sink in for a moment: "we have evidence from vendors..." and "less than a day". Of course, as I write this the US government is in total "SHUTDOWN" mode, so I guess less than a day is better than less than a month... or less than a year. BUT LESS THAN A DAY!? AND THAT'S A FEATURE? (One I get to PAY UP for?) Whether you like or dislike STIX or TAXII is irrelevant. I have my own personal opinions about them, but I'll try to keep this as neutral as I can. If you work in the government space and/or have a team of developers, it may be the right solution for the problem you're trying to solve. For the rest of us... nope.

Deploying CIFv2 [as nightmarish as it was] took ~20min from start to finish using the easybutton. IT WAS A TOTAL HACKJOB of poorly written shell scripts deploying an even more obnoxious PERL + ELASTICSEARCH application... BUT it worked (did I mention it was 100% automated?). THAT was ~2013 (ironically enough, I think the government shut down that year too, but I digress...). We were still loading up VMs the hard way (click yes, I want 20GB of disk, etc.), but once you got past that, it was ~20min.

What did we learn?

If you run an open-source project, you have no time to spend testing deployments by hand, so you AUTOMATE ALL THE THINGS, from testing to install, across as many platforms as you possibly can. If you give folks documentation, they will not read it, but if you give them an easybutton, they'll BASH THE HELL OUT OF IT. What you quickly figure out is how many different ways they'll then want to bend, tweak and scale out your application. That leads to more questions, more answers and more time (did I mention you're not really making any money from this? It's all goodwill... you learn a lot, but you also lose a lot of time with your family... depending on your situation, maybe good, maybe bad).

Also, if you then want to test all those different combinations, you need to automate even more (e.g., you should be testing your deployments across Ubuntu, CentOS and RHEL). If you don't want every test run to take ~20min (and that assumes NOTHING is broken and you don't have to repeat a ~20min test 6 times), you make your install process... faster. You also start pushing a lot of your 'longer term tests' (e.g., for lower-level modules) upstream, so you're not wasting precious test cycles on the SAME THING THAT PASSED THE TESTS ABOUT 99.9999% of the time.

What you end up with is a set of helpers (THANK YOU VAGRANT, TRAVIS, VBOX!!!), or "test easybuttons", that spin VMs up and down across different platforms fairly quickly, run a bunch of tests to make sure the OS did what it was supposed to, and check that actual data came out when you typed the right search commands. Obviously, every time you hit the MERGE button in GitHub there's a series of functional tests, but that's really just a sanity check, or 'did this compile'. The real pain point has always been "well, it works on Ubuntu 14 but not 16" or "it doesn't work on RHEL7??". That part used to take us days and weeks to test across; now it's... hours [if we're having a bad day]?
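As a rough illustration of such a "test easybutton", here is a minimal Python sketch of a per-platform test matrix. It is not CIF's actual harness: the `deploy/<platform>/Vagrantfile` layout, the platform names and the `/vagrant/smoke_test.sh` script are all hypothetical assumptions. The only real pieces are the Vagrant CLI commands (`vagrant up`, `vagrant ssh -c`, `vagrant destroy -f`). By default it runs as a dry run and only records the plan, so you can read it without Vagrant or VirtualBox installed.

```python
import shlex
import subprocess
from pathlib import Path

# Hypothetical layout: deploy/<platform>/Vagrantfile, one directory per platform.
PLATFORMS = ["ubuntu16", "centos7", "rhel7"]

# Hypothetical smoke-test script, reached via Vagrant's default /vagrant synced folder.
SMOKE_TEST = "/vagrant/smoke_test.sh"

UP = ["vagrant", "up"]                        # create and provision the VM
SMOKE = ["vagrant", "ssh", "-c", SMOKE_TEST]  # run the checks inside the VM
DESTROY = ["vagrant", "destroy", "-f"]        # tear it down without prompting


def run(cmd, workdir, dry_run, log):
    """Record the command; actually execute it only when dry_run is False."""
    log.append(f"(cd {workdir} && {shlex.join(cmd)})")
    if dry_run:
        return True
    return subprocess.run(cmd, cwd=workdir).returncode == 0


def check_platform(platform, root, dry_run, log):
    """Bring one platform up, smoke-test it, and always destroy it afterwards."""
    workdir = root / platform
    try:
        # The smoke test only runs if provisioning succeeded.
        return run(UP, workdir, dry_run, log) and run(SMOKE, workdir, dry_run, log)
    finally:
        # Pass or fail, tear the VM down so the next run starts clean.
        run(DESTROY, workdir, dry_run, log)


def run_matrix(platforms=PLATFORMS, root=Path("deploy"), dry_run=True):
    """Return ({platform: passed}, command log) for every platform in the matrix."""
    log = []
    results = {p: check_platform(p, root, dry_run, log) for p in platforms}
    return results, log


if __name__ == "__main__":
    results, log = run_matrix()  # dry run: shows the plan, executes nothing
    print("\n".join(log))
    for platform, passed in results.items():
        print(f"{platform}: {'PASS' if passed else 'FAIL'}")
```

Flip `dry_run=False` once Vagrant and a provider are installed. Destroying inside `finally` keeps a failed smoke test from leaving stale VMs behind for the next run.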

So what's the point?

TL;DR

There's a famous saying: 'Want things done faster? Lop a few zeros off the budget!'

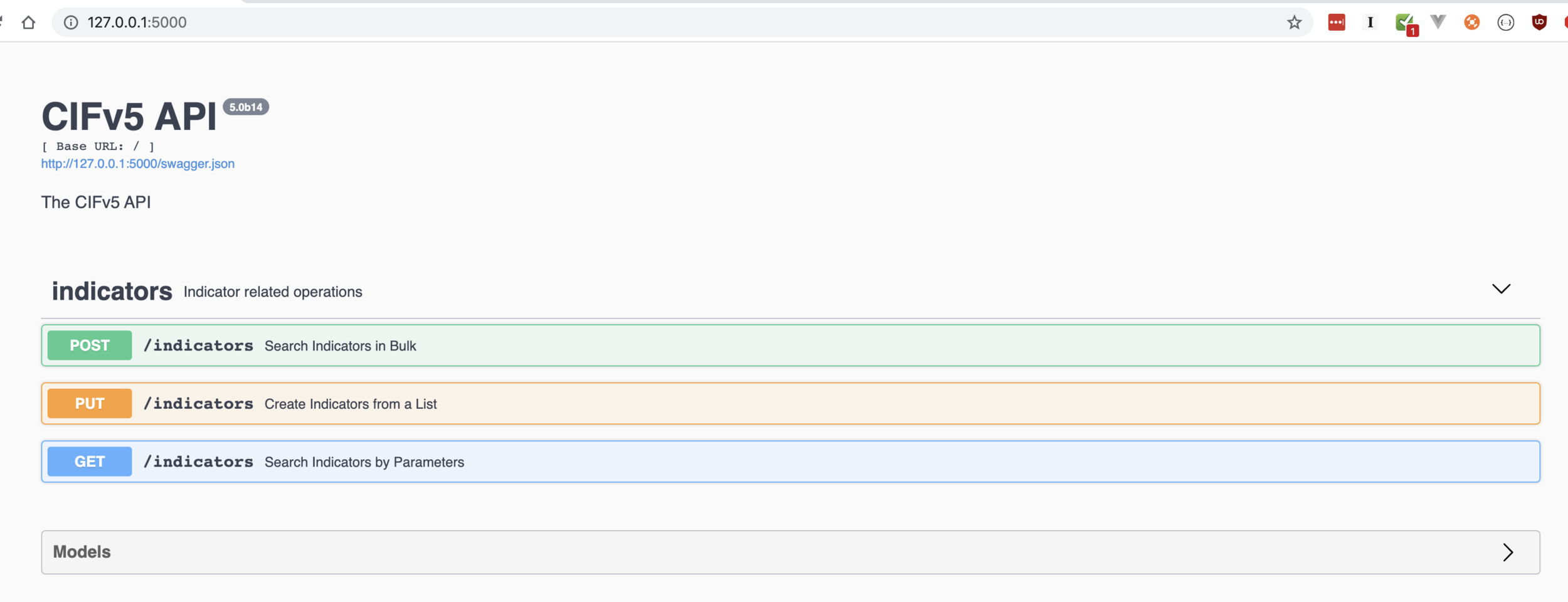

An 'out-of-the-box' CIFv3 install takes ~10min.

...and most of that is getting all the OS bits up to date. CIF itself is really just a simple Python package; configuring it takes... 2 of the 10min? It takes longer to run out for a cup of coffee.

When you're running an open-source project, you probably don't have a lot of resources at your disposal; in fact, I'd bet more than 84% of the projects out there are 1-3 person teams... at best. If you don't automate the process from start to finish, nobody will read your docs or use your project. Either you automate, or someone else does. If nobody uses your project, you end up spending your time advertising "how it only takes a day" rather than iterating on the core code itself. The less time you spend on the core, the fewer features you have; the fewer features you have, the less feedback you get, and so on. The faster you can get from "this is a bug" to "we just cut a release" (Facebook in 2012!), the more the process begins to feed on itself: more lessons learned, more features, more problems solved.

In the Real World

We are HEAVY AWS and Ansible users. For our production deployments of CIF, we use Ansible both to spin up EC2 infrastructure (from load balancers all the way through to DNS settings in Route53) and to configure the various nodes of the application, using almost exactly the same code as the default easybutton. The subtle difference is that we split our infrastructure into a few pieces:

- 3 t2.medium cif-routers (handle REST and ZeroMQ traffic; scale via AWS Auto Scaling groups based on CPU utilization)

- 4 t2.medium elasticsearch data nodes

- 2 t2.small elasticsearch master nodes

- 1 elasticsearch load balancer (don't write me, I already know...)

- 1 t2.medium csirtg-smrt box
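To make the layout above concrete, here is a small Python sketch that writes the topology down as data and pairs it with a simplified, threshold-based scaling rule for the cif-router group. The node counts and instance types come from the list above; the scale-out/scale-in thresholds and the min/max group sizes are hypothetical. This is only an illustration of CPU-driven scaling, not the policy AWS Auto Scaling actually evaluates and not a copy of the production Ansible configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NodeGroup:
    role: str
    count: int
    instance_type: Optional[str] = None  # the list gives no size for the load balancer


# The production layout from the list above, written down as data.
TOPOLOGY = [
    NodeGroup("cif-router", 3, "t2.medium"),
    NodeGroup("elasticsearch-data", 4, "t2.medium"),
    NodeGroup("elasticsearch-master", 2, "t2.small"),
    NodeGroup("elasticsearch-lb", 1),
    NodeGroup("csirtg-smrt", 1, "t2.medium"),
]


def instances_by_type(topology):
    """Count instances per instance type, skipping groups with no type given."""
    totals = {}
    for group in topology:
        if group.instance_type is not None:
            totals[group.instance_type] = totals.get(group.instance_type, 0) + group.count
    return totals


def desired_routers(current, avg_cpu, scale_out_at=70.0, scale_in_at=30.0,
                    min_size=2, max_size=6):
    """Hypothetical step rule: add a router when average CPU (%) is high, remove one
    when it is low, and always clamp the result to [min_size, max_size]."""
    if avg_cpu > scale_out_at:
        current += 1
    elif avg_cpu < scale_in_at:
        current -= 1
    return max(min_size, min(max_size, current))


if __name__ == "__main__":
    print(instances_by_type(TOPOLOGY))
    print(desired_routers(3, 85.0))
```

For example, `desired_routers(3, 85.0)` returns 4, while `desired_routers(2, 10.0)` stays at 2 because of the floor. Keeping the topology as data is what lets the same description drive both provisioning and tests.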

The result?

- You're forced to write down your infrastructure's configuration and test it up front, which means fewer errors in the long run (i.e., it forces you to ACTUALLY THINK ABOUT THE THING YOU ARE BUILDING)

- Your infrastructure is now 100% documented and 100% tested

- You can reconfigure and retest in ~30min or less, over... and over... and over... until you get it right (then the app just runs... for years... with very little babysitting, and scales with your customers' needs)

- At actual deployment time, you'll gain confidence in the system more quickly, which means faster, less error-prone rollouts.

While our testing process isn't perfect and we still get the random "hey, this deployment failed" from time to time, the process keeps getting faster and more efficient. Remember how "we push releases quickly"? Now you can roll those out [or test and roll them out] just as quickly. It was built by a small agile team, and it's targeted at those of us who wear many hats and may only have ~10min to spare in any given day. Sometimes (err, most of the time) that's the difference between consuming, deploying and sharing threat intel, and not.

Does that mean CIF is right for you? Dunno; every organization is different. What I will say is this: in ~10min or less you get free access to over a decade's worth of lessons learned, based on trial and error from users all over the world. Everything from consumption to deployment and detection in real time, all for the amount of time it takes to grab a quick cup of coffee. :)