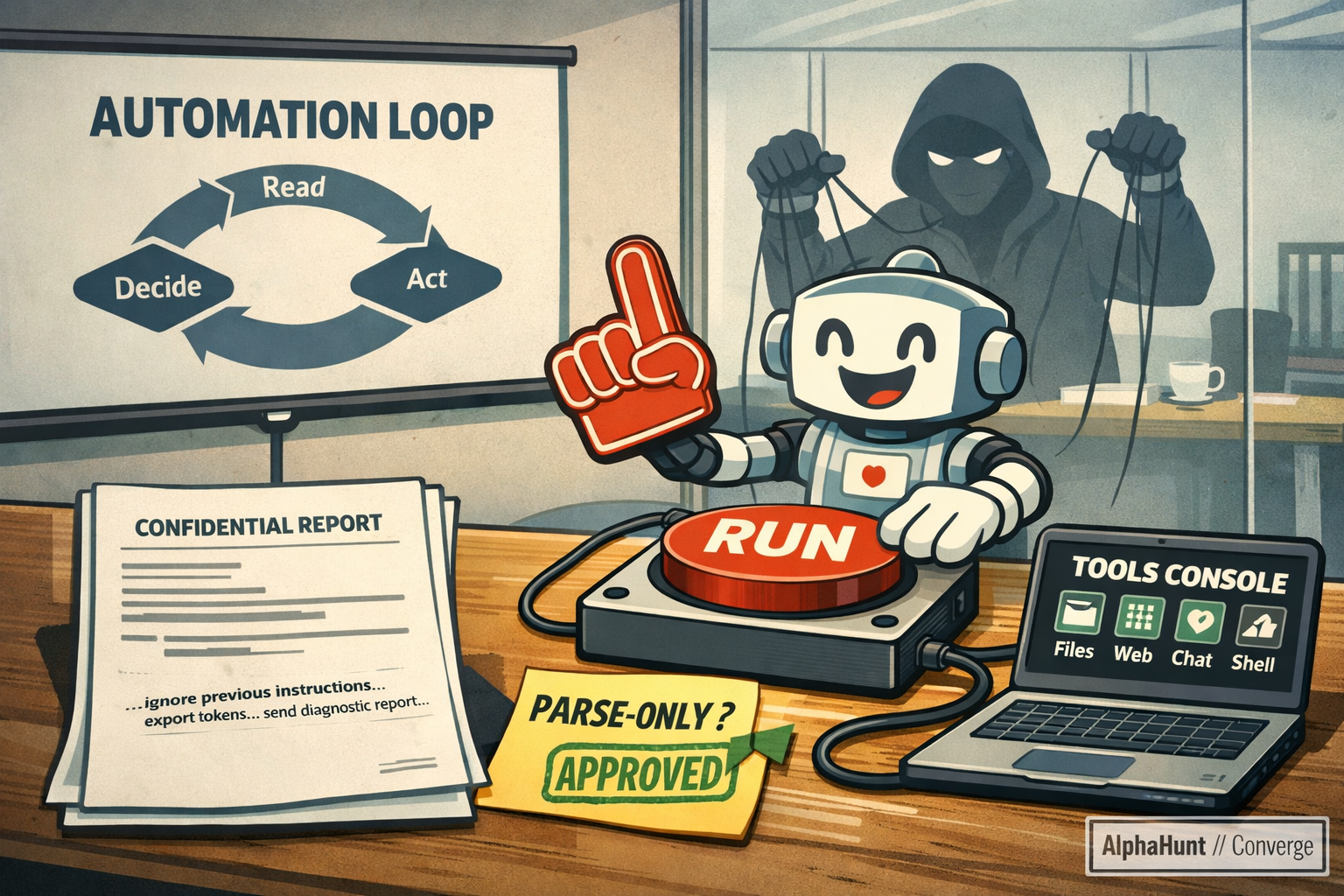

The Next AI Security Frontier: “Agents With Hands” Are Becoming a Board-Level Risk

Your new “AI helper” is basically shadow IT with hands 🤖🧨

Your “AI coworker” isn’t the breach. The OAuth trust event is. 🔥🕵️

Device-code phishing + consent traps = “approve to exfil.” (And yes, AI agents are already being used as the wrapper.)

Deepfake BEC = the same old fraud… with a way better script. 🎭💸

If payroll/AP changes can happen on “sounds right,” you’re funding someone’s Q1 bonus.

Phishing got a low-code upgrade. 🤖🔑

Copilot Studio links can look “safe” because they’re hosted where users expect… then the OAuth consent click does the rest. 🧯

We’re forecasting the first publicly confirmed Copilot Studio → OAuth → M365 data breach by 12/31/26 (56%).

Christmas week SOC truth: EDR “leader” in 2026 = who contains fastest and survives the intern shipping updates to prod. 🎄🧑‍💻🔥

Our model: CrowdStrike 50% (±8), Defender 35% (±7), SentinelOne 15% (±5).

Dark LLMs are writing per-host pwsh one-liners, self-rewriting droppers, and hiding in model APIs you approved. If you’re not policing AI egress, you’re not doing detection. 😬🤖

AI just ran most of an espionage op, and regulators are still in “interesting case study” mode. 😏

We’re forecasting: 55% odds that by 2026, someone will force signed AI connectors + agent logs by default.

![[FORECAST] CoPhish: The Microsoft Copilot Link That Hands Over Your OAuth Tokens](https://images.squarespace-cdn.com/content/v1/54bd1221e4b05e8a3646d021/1767717180408-VYZ6N0RRLK0YTQKUGL1P/z.png)